Why text-to-speech voices sound better on BeyondWords

Lots of text-to-speech service providers use AI voices from Amazon Web Services, Yandex, Microsoft Azure, and Google Cloud Platform.

But these voices sound best when you use them in BeyondWords.*

Why?

It’s thanks to our natural language processing algorithms.

Setting voices up for success

AI voices can interpret text in two formats: plain text, or speech synthesis markup language (SSML).

SSML tags provide extra information to the AI voice, clarifying pronunciations and improving speech flow. Using SSML therefore ensures a higher-quality voice output.

Some text-to-speech services only allow you to input plain text, meaning you can’t achieve higher-quality outputs through SSML.

Others, like Amazon Polly, give you the option to manually insert SSML tags. But this is complex and time-consuming. Let’s say the voice is mispronouncing "Joe Biden". Fixing this requires an understanding not only of SSML, but of the international phonetic alphabet (IPA)—symbols that linguists use to represent speech sounds.

<phoneme alphabet="ipa" ph="'dʒəʊ baɪdən">Joe Biden</phoneme>

This is not feasible for the majority of publishers.

BeyondWords, on the other hand, adds the SSML tags for you.

Whether it is imported from your website in HTML format or manually imported as plain text, your content is automatically converted into SSML before being processed by the AI voice.

This is made possible by a layer of natural language processing (NLP) algorithms, which can programmatically read, analyze, and interpret written language.

How our NLP works

Our NLP uses a combination of rule-based and neural network–based techniques. Our deep learning models are trained on large data sets, which allow them to “learn” how humans convert particular text elements into speech and how this differs depending on context. This is particularly useful for resolving ambiguities in text.

For example, in the sentence "I read the book", our NLP identifies, through contextual features, that the homograph "read" is most likely being used in the past tense, and so should be pronounced like "red" (/rɛd/) as opposed to "reed" (/riːd/). It applies the <phoneme> SSML tag accordingly, ensuring the AI voice gives the correct output.

<s>I <phoneme alphabet="ipa" ph="'rɛd">read</phoneme> the book yesterday.</s>

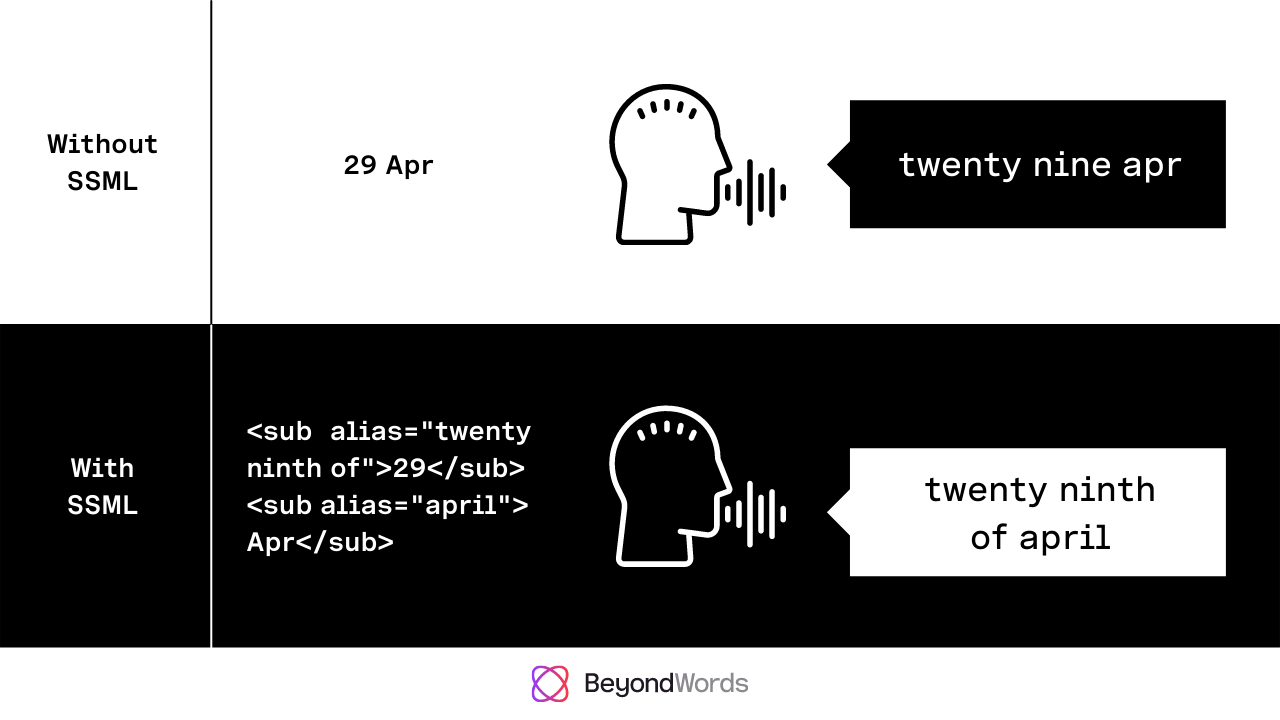

Also consider non-standard elements, such as dates. Our system can determine whether to read a number as cardinal (e.g. twenty nine) or ordinal (e.g. twenty ninth) numeral based on the usage context and other features.

Without this NLP and SSML layer, the AI voice may not predict which pronunciation of an element is correct and simply output a naive “best guess”. This is why many text-to-speech systems struggle with ambiguities.

A customizable and evolving NLP

Our team of computational linguists make iterative and domain-specific improvements to the NLP. This means that voice outputs evolve even when the AI voices behind them stay the same, and can adapt to the needs of each BeyondWords user.

Take the abbreviation "NLP", for instance. In the context of this article, it refers to "natural language processing". But it is also the airport code for Nelspruit, South Africa. In medicine, it can be shorthand for ‘no light perception’. With custom-built text normalization rules, we can ensure that our system delivers the most relevant result for a particular publisher.

Text-to-speech providers without a post-optimization NLP layer have no way to efficiently extend or make domain-specific customizations to an existing voice. If they wish to correct conversion errors, they must retrain the voice itself — something that comes at great cost and cannot guarantee accuracy, especially when it comes to unusual and idiosyncratic text-to-speech conversions.

Here are some more examples of what our NLP can do:

- Apply

<phoneme>SSML tags to ensure correct pronunciation of novel or complex words - Apply

<lang>SSML tags to ensure foreign words are pronounced accurately - Apply

<sub>SSML tags to ensure symbols, abbreviations, and acronyms are pronounced properly - In HTML:

- Identify tweets embedded within ‘blockquote’ elements with ‘twitter-tweet’ attributes, then fetch, clean, and read the content of the tweet

- Remove elements that shouldn’t be read aloud, such as image captions, so that they’re not processed into audio

All of this happens automatically, and has very little impact on production speeds—your text will typically be processed into audio within a couple of minutes.

The full package for digital publishers

BeyondWords is a popular alternative to Amazon Polly, Google Read Aloud Player, and other text-to-speech service providers not only because of our customizable NLP and automatic SSML tagging, but because of our audio CMS.

While our CMS integrations and Text-to-Speech Editor make it easy to create audio, our embeddable players, shareable URLs, and podcast feed make distribution near effortless. Publishers also have the option to monetize and analyze their audio.

And while you will have unlimited access to advanced AI voices from Amazon, Microsoft, Yandex, and Google, you also have the option to create a custom voice.

* Compared to non-SSML text-to-speech.