The Importance of Text Preprocessing in TTS

*This post is read by Tony (standard voice) and Maria (premium voice).*

Text-to-speech (TTS) is the artificial production of human speech based on input text. TTS can be viewed as a sequence-to-sequence mapping where text gets transformed into one of its many possible speech forms.

However, this process is not as straightforward as it might seem. Anyone who used early sat nav systems can probably recall numerous instances when the technology mishandled a pronunciation, sometimes with embarrassing results. Nuances in pronunciation become particularly important when dealing with delicate news material or financial research.

The main challenge of perfecting TTS lies in the nature of text itself, which is inherently ambiguous and under-specified with regard to many aspects of speech.

In this post, we outline the key challenges presented in processing input text and describe how a Text Preprocessor can help.

Ambiguity in written language

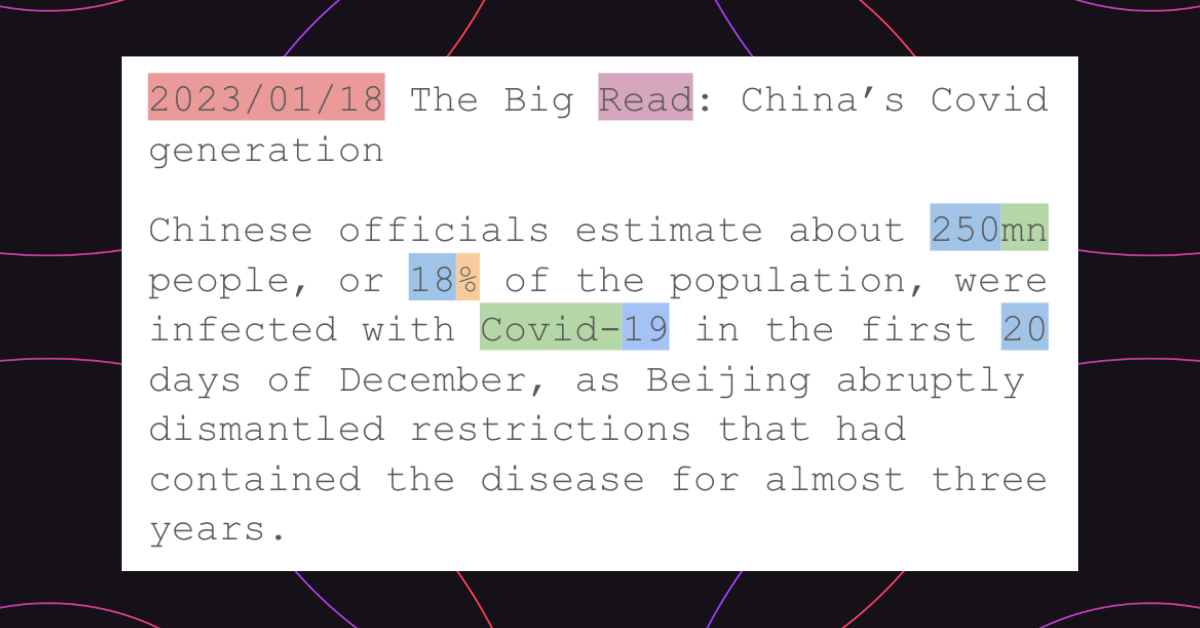

We can roughly categorize text into 1) natural language and 2) non-standard words (NSWs). Loosely speaking, natural language is any text that can be read out loud immediately, whereas NSWs have to be verbalized first. For example, “April first” can be read out loud as is, whereas “1st April” must first be converted into a natural language form, such as “April first” or “first of April”.
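As a rough illustration, an ordinal date such as “1st April” can be rewritten as “first of April” with a regular expression. This is a minimal sketch under stated assumptions: it handles only the `<day><suffix> <Month>` pattern for days 1 to 31, does not check that the suffix matches the number, and the function names are hypothetical.

```python
import re

ONES = ["", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty"]
# Ordinals that are not formed by simply appending "th".
IRREGULAR = {"one": "first", "two": "second", "three": "third",
             "five": "fifth", "eight": "eighth", "nine": "ninth",
             "twelve": "twelfth"}
MONTHS = ("January|February|March|April|May|June|July|August|"
          "September|October|November|December")
# Matches e.g. "1st April" or "22nd May"; the suffix is not checked against the number.
DATE_RE = re.compile(rf"\b(\d{{1,2}})(?:st|nd|rd|th) ({MONTHS})\b")


def ordinal_words(day: int) -> str:
    """Spell out a day of the month (1-31) as an ordinal, e.g. 21 -> 'twenty-first'."""
    if day < 20:
        cardinal = ONES[day]
    else:
        cardinal = TENS[day // 10] + (f"-{ONES[day % 10]}" if day % 10 else "")
    *head, last = cardinal.split("-")
    if last in IRREGULAR:
        last = IRREGULAR[last]
    elif last.endswith("y"):          # twenty -> twentieth
        last = last[:-1] + "ieth"
    else:
        last += "th"
    return "-".join(head + [last])


def expand_ordinal_dates(text: str) -> str:
    """Rewrite '<day><suffix> <Month>' as '<ordinal> of <Month>'."""
    def _replace(match: re.Match) -> str:
        day = int(match.group(1))
        if not 1 <= day <= 31:
            return match.group(0)     # leave implausible days untouched
        return f"{ordinal_words(day)} of {match.group(2)}"
    return DATE_RE.sub(_replace, text)
```

Choosing “first of April” over “April first” is itself a style decision; a real normaliser would likely expose it as a locale or user setting.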

There are many types of NSWs that need conversion, such as dates (“01/01/2001” into “January the first, two thousand and one”), currencies (“$100” into “one hundred dollars”) or units and magnitudes (“10m” into “ten meters” or “ten million”, depending on context), to name just a few.
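A toy normaliser for two of these categories might look like the following. It spells out integers below one million, expands dollar amounts such as “$100” and “$25m”, and resolves the ambiguous “10m” with a hypothetical keyword heuristic (`MILLION_CUES`): if the sentence mentions words like “revenue” or “budget”, the suffix is read as “million”, otherwise as “meters”. The cue list is an assumption for illustration only, and the sketch omits the British “and” (“two thousand one” rather than “two thousand and one”).

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]
# Hypothetical context cues that make "10m" read as "ten million".
MILLION_CUES = {"revenue", "budget", "raised", "users", "profit"}
CURRENCY_RE = re.compile(r"\$(\d+)(m?)\b")   # "$100", "$25m"
UNIT_RE = re.compile(r"\b(\d+)m\b")          # "10m"


def cardinal(n: int) -> str:
    """Spell out 0 <= n < 1,000,000 (no British 'and')."""
    if n < 20:
        return ONES[n]
    if n < 100:
        return TENS[n // 10] + (f"-{ONES[n % 10]}" if n % 10 else "")
    if n < 1000:
        rest = n % 100
        return f"{ONES[n // 100]} hundred" + (f" {cardinal(rest)}" if rest else "")
    if n < 1_000_000:
        rest = n % 1000
        return f"{cardinal(n // 1000)} thousand" + (f" {cardinal(rest)}" if rest else "")
    raise ValueError("number out of range for this sketch")


def _currency(match: re.Match) -> str:
    amount = int(match.group(1))
    if match.group(2):                        # "$25m" -> million dollars
        return f"{cardinal(amount)} million dollars"
    return f"{cardinal(amount)} dollar" + ("" if amount == 1 else "s")


def normalize_nsws(text: str) -> str:
    """Expand dollar amounts, then resolve '<n>m' as meters or million."""
    text = CURRENCY_RE.sub(_currency, text)
    context = set(re.findall(r"[a-z]+", text.lower()))
    read_as_million = bool(context & MILLION_CUES)

    def _unit(match: re.Match) -> str:
        n = int(match.group(1))
        if read_as_million:
            return f"{cardinal(n)} million"
        return f"{cardinal(n)} meter" + ("" if n == 1 else "s")

    return UNIT_RE.sub(_unit, text)
```

Applying the currency rule first means “$25m” is consumed as money before the more ambiguous bare-“m” rule ever sees it.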

But even text without NSWs might require some form of disambiguation. For example, abbreviations need to be expanded into their full form, like “St.” into “Street” or “Saint”. Acronyms and letter sequences pose a similar problem: some are read as words (such as “NASA”), while others are read by pronouncing each letter separately (such as “FBI”).
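Two simple, deterministic rules can illustrate this kind of disambiguation. Both are hypothetical heuristics rather than a production method: “St.” becomes “Saint” when a capitalised word follows it and “Street” otherwise, and all-caps tokens are spelled out letter by letter unless they appear in a small, hand-curated list of acronyms read as words (`READ_AS_WORD` is an assumption).

```python
import re

# Hypothetical, hand-curated list of acronyms that are read as words.
READ_AS_WORD = {"NASA", "NATO", "UNESCO"}


def expand_st(text: str) -> str:
    """Expand 'St.' to 'Saint' before a capitalised word, else to 'Street'."""
    text = re.sub(r"\bSt\.(?= [A-Z])", "Saint", text)   # "St. Paul" -> "Saint Paul"
    return re.sub(r"\bSt\.", "Street", text)            # "Baker St." -> "Baker Street"


def expand_acronyms(text: str) -> str:
    """Spell out all-caps tokens letter by letter unless listed in READ_AS_WORD."""
    def _expand(match: re.Match) -> str:
        token = match.group(0)
        return token if token in READ_AS_WORD else " ".join(token)
    return re.sub(r"\b[A-Z]{2,}\b", _expand, text)
```

The first rule fails when “Baker St.” ends a sentence and the next sentence starts with a capital letter, which is exactly the kind of case that calls for richer context.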

The process of disambiguating and expanding natural language and NSWs is commonly referred to as Text Normalisation. Its output can be interpreted as a sequence of graphemes: letters that represent sounds in a written language.

Ambiguity with respect to pronunciation

The process of normalizing text into a sequence of graphemes removes some of the ambiguity, albeit not fully.

Take heteronyms, i.e. words which are spelled the same but pronounced differently depending on the context they appear in. For example, “lead” can refer to being in charge, in which case it is pronounced like “leed”. However, “lead” can also refer to the metal, in which case it is pronounced like “led”.
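A context-window heuristic can sketch how a system might choose between the two readings of “lead”. The cue words in `METAL_CUES` and the ±2-word window are assumptions chosen for illustration; real systems more commonly rely on part-of-speech tagging or learned classifiers.

```python
# Hypothetical cue words that suggest the metal reading of "lead".
METAL_CUES = {"pipe", "pipes", "paint", "poisoning", "metal", "toxic"}


def pronounce_lead(tokens: list, i: int) -> str:
    """Return IPA for tokens[i] ('lead') based on a +/-2-word context window."""
    window = tokens[max(0, i - 2):i] + tokens[i + 1:i + 3]
    if {t.lower() for t in window} & METAL_CUES:
        return "lɛd"    # the metal
    return "liːd"       # to be in charge (default reading)
```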

Pronunciation can also vary based on regional or personal preferences. For instance, the word “either” can be pronounced as “ee-thur” or “eye-thur”. Or consider the brand name “Nike”, which in the US is commonly pronounced “nai-kee”, whereas in other parts of the world people might say “nyk”.

Furthermore, new words emerge over time, such as “GIF” a few decades ago. Some people pronounce it with a hard “g” (as in “gift”), while others use a soft “g” (as in “giraffe”). Sometimes these new words deviate from existing orthographic rules, which makes inferring their pronunciation challenging.

The examples above motivate the need to better specify the intended pronunciation of input text. This is commonly achieved with Phonemic Transcription, a process which transcribes the grapheme sequence into a sequence of phonemes. Phonemes are the smallest units of sound that can distinguish one word from another, and are represented by phonetic alphabets such as the International Phonetic Alphabet (IPA). With hundreds of symbols, IPA allows for more granular control than grapheme-based input alone.
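A minimal lexicon-based transcription step might look like this. The IPA entries are illustrative, the first variant is treated as the default, and the optional `preferences` mapping (a hypothetical parameter) lets a caller pick another variant, such as “naɪk” for “Nike”. Words missing from the lexicon are flagged rather than guessed; in practice they would be handed to a grapheme-to-phoneme model, which is not shown.

```python
# Illustrative lexicon: IPA variants per word, the first one is the default.
LEXICON = {
    "either": ["ˈiːðər", "ˈaɪðər"],
    "nike": ["ˈnaɪki", "naɪk"],
    "gif": ["ɡɪf", "dʒɪf"],
}


def transcribe(words, preferences=None):
    """Map words to IPA by lexicon lookup.

    preferences: optional {word: variant index} chosen by the user (hypothetical).
    Out-of-vocabulary words are wrapped in angle brackets instead of guessed.
    """
    preferences = preferences or {}
    phonemes = []
    for word in words:
        key = word.lower()
        variants = LEXICON.get(key)
        if variants is None:
            phonemes.append(f"<{word}>")   # would go to a G2P model in practice
        else:
            phonemes.append(variants[preferences.get(key, 0)])
    return phonemes
```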

Ambiguity with respect to various aspects of speech

Even after Text Normalisation and Phonemic Transcription, a reader often has little information about many aspects of speech, such as timbre, which characterizes who the speaker is (e.g. their age), or where they come from (e.g. their regional accent or dialect).

It is often unclear how a given text should be read out loud in terms of prosodic aspects, such as rhythm, stress and intonation. Other aspects, such as expressiveness (e.g. emotion) and paralinguistic cues (e.g. laughs and sighs), are also mostly unspecified.

In fact, speech patterns are so individual that one could argue there are as many languages as there are individuals.

In neural TTS, many of the aspects above are collectively referred to as the Variation Information of the speech signal, which can loosely be thought of as all the information that is missing from the phoneme sequence but necessary to produce natural-sounding speech. Variation Information is commonly learned from the data of one or more speakers through acoustic modeling during Speech Synthesis.

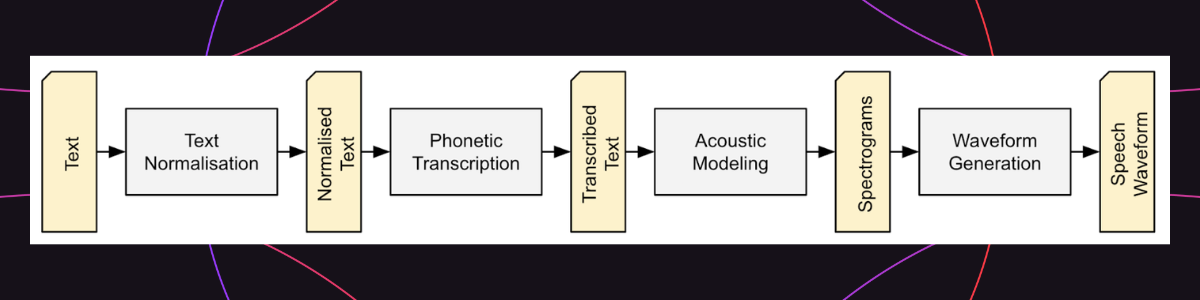

The Cascade Model

In summary, to tackle the different kinds of ambiguity, TTS practitioners commonly decompose the problem into the three distinct steps of Text Normalisation, Phonemic Transcription and Speech Synthesis, sometimes referred to as the “Cascade Model”.

Although end-to-end approaches exist, the cascade model makes the sequence-to-sequence mapping more tractable by first disambiguating the input text and better specifying the intended pronunciation, before inferring the Variation Information and generating the speech waveform.
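The decomposition can be sketched as three swappable functions composed in order. The toy stages are placeholders with hypothetical names, a two-word lexicon and a "synthesizer" that merely encodes the phoneme string; a real system would use a neural acoustic model and vocoder in the last stage.

```python
from typing import Callable, List


def run_cascade(
    text: str,
    normalize: Callable[[str], List[str]],
    transcribe: Callable[[List[str]], List[str]],
    synthesize: Callable[[List[str]], bytes],
) -> bytes:
    """Text Normalisation -> Phonemic Transcription -> Speech Synthesis."""
    graphemes = normalize(text)        # disambiguated, expanded words
    phonemes = transcribe(graphemes)   # explicit pronunciation
    return synthesize(phonemes)        # in practice: acoustic model + vocoder


# Toy stand-in stages, for illustration only.
TOY_LEXICON = {"baker": "ˈbeɪkər", "street": "striːt"}


def toy_normalize(text: str) -> List[str]:
    return text.replace("St.", "Street").lower().split()


def toy_transcribe(words: List[str]) -> List[str]:
    return [TOY_LEXICON.get(w, f"<{w}>") for w in words]


def toy_synthesize(phonemes: List[str]) -> bytes:
    # A real synthesizer returns a waveform; this just encodes the phoneme string.
    return " ".join(phonemes).encode("utf-8")
```

Keeping the stages as separate callables means each can be tested, replaced or overridden (for example with user pronunciation preferences) without retraining the synthesis model.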

The Importance of a Text Preprocessor

Text Normalisation and Phonemic Transcription are often deployed as components of a system called a Text Preprocessor. Although most attention usually goes to Speech Synthesis, the Text Preprocessor is an indispensable part of any production-grade TTS system, for several reasons.

First, data is sparse, and collecting a dataset that captures all the diversity of human speech is expensive. Second, failing to normalize and transcribe input text in a value-preserving manner can result in a bad user experience, sometimes referred to as “catastrophic failures”. Third, not everything can be learned from data. In many cases there is no universally correct answer, and disambiguating pronunciation variants means taking user preferences into account.

A Text Preprocessor helps with these challenges by (1) removing the need to learn many of the aforementioned disambiguation tasks from data; (2) enabling the use of deterministic rules to guarantee value-preserving normalization and pronunciation; and (3) offering user-level control to customize pronunciation variants according to user preferences.
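One way to approximate the value-preserving guarantee in point (2) is a round-trip check: parse the spoken form back into a number and reject the normalization if the values differ. This sketch assumes the spoken form contains only English number words (it tolerates the British “and”), and the function names are hypothetical.

```python
UNITS = {w: i for i, w in enumerate(
    "zero one two three four five six seven eight nine ten eleven twelve "
    "thirteen fourteen fifteen sixteen seventeen eighteen nineteen".split())}
TENS = {w: 10 * i for i, w in enumerate(
    "twenty thirty forty fifty sixty seventy eighty ninety".split(), start=2)}
SCALES = {"thousand": 1_000, "million": 1_000_000}


def words_to_int(phrase: str) -> int:
    """Parse English number words, e.g. 'two thousand and one' -> 2001."""
    total, current = 0, 0
    for token in phrase.lower().replace("-", " ").split():
        if token == "and":
            continue                      # British "two thousand and one"
        if token in UNITS:
            current += UNITS[token]
        elif token in TENS:
            current += TENS[token]
        elif token == "hundred":
            current *= 100
        elif token in SCALES:
            total += current * SCALES[token]
            current = 0
        else:
            raise ValueError(f"not a number word: {token!r}")
    return total + current


def is_value_preserving(digits: str, spoken: str) -> bool:
    """True only if the spoken form round-trips to the same integer value."""
    try:
        return words_to_int(spoken) == int(digits)
    except ValueError:
        return False
```

If the check fails, a caller could fall back to a safer reading, such as pronouncing the digits one by one, trading naturalness for correctness.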

In summary, in scenarios where user-level control and specific speech output are required, a Text Preprocessor addresses the challenges of data sparsity, catastrophic failures and lack of consensus. Even with Large Language Models on the rise, a text-analysis system is likely to remain an essential component of any high-caliber TTS service.

To learn more about Text Preprocessing at BeyondWords, or to book a call with one of our sales team, say hi at [email protected].